Information Architecture

- Define the damned thing

- Make AI less wrong

- Champion AI as a tool for thinking, not thought.

- Figure out your own personal taxonomy of AI. Let’s talk about GenAI or Artificial General Intelligence or Large Language Models as appropriate. Don’t fall into the trap of saying just “AI” when you want to talk about a specific AI technology. To get you started with some AI taxonomies in progress, try here, here, and here.

- Get clear on the risks of AI, and the nuanced risks for each type of AI. Define what AI does well, and what it does badly. Follow Ethan Mollick, Kurt Cagle, Mike Dillinger, and Emily Bender, for a start. They’ll point the way to more experts in the field.

- Talk with clients and colleagues about AI definitions. Help them get clear on what they mean when they say “AI.”

- Help out with projects like “Shape of AI,” which is creating a pattern library of AI interactions.[3] For instance, GenAI interfaces can’t just be an empty text box. IAs know that browse behavior is a necessary complement to search behavior. How do we ensure that’s part of a GenAI experience?

- Create and distribute resources like this ChatGPT Cheat Sheet to improve people’s ability to use GenAI experiences.

- Think about what a resource that lists and evaluates use cases for AI might look like. How might we help people understand how to use AI better?

- ontology - an accurate representation of the world, which feeds:

- knowledge graphs - structured, well-attributed, and well-related content, which needs:

- content strategy - understanding what content is needed, what’s inaccurate or ROTting, what’s missing, and how to create and update it, which needs:

- user experience - to understand what the user needs and how they can interpret and use AI output.

[1] Bob wanted us to pump the brakes on building new AI experiences, but I think that’s pretty unlikely at this point. Sorry, Bob.

[2] Our inability to define our own profession aside, of course. Exception that proves the rule, etc.

[3] I learned about this project on Jorge Arango’s podcast, The Informed Life. If you’re not already following Jorge, what are you waiting for?

[4] Rachel’s full talk is available on her site.

- humility - the ability to say “I don’t know”

- judgment - the ability to say “that’s not possible” or “that’s not advisable” or “that’s a racist question,” or to evaluate and question data that doesn’t seem right[2]

- the ability to learn from feedback mechanisms like pain receptors or peer pressure

- sentience - the ability to feel or experience through senses

- cognition - the ability to think

[1] This is called pareidolia.

[2] At IAC24, Sherrard Glaittli and Erik Lee explored the concept of data poisoning in an excellent and entertaining talk titled “Beware of Glorbo: A Use Case and Survey of the Fight Against LLMs Disseminating Misinformation.”

- Summarizing documents

- Synthesizing information

- Editing written content

- Translation

- Coding

- Categorization

- Text-to-speech / speech-to-text

- Search

- Medical research and diagnosis

- Brainstorming, problem-solving, and expanding ideas

- Understanding and incorporating diverse experiences

- Generating pragmatic titles with good keywords

- Proofreading documents

- Creating project schedules and realistic time estimates

- Outlining and initial content structuring

- Process some supplied text in a specific way according to my directions. I’ll then review the output and do something with it. (And possibly feed it back to the AI for more processing.)

- Output some starting text that I can then process further according to my needs.

- Generative AI creates content in response to prompts.

- Content AI processes and analyzes content, automating business processes, monitoring and interpreting data, and building reports.

- Knowledge AI extracts meaning from content, building knowledge bases, recommendations engines, and expert systems.

Per Tesler’s Theorem, apparently misquoted.

General AI and Super AI are still theoretical. ChatGPT, Siri, and all the other AI agents we interact with today are considered Narrow AI.

- Information Ecosystems

- Information Ecologies

- We need better tools

- As Christian Crumlish put it, the new spirit of IA is Third Wave IA, or Resmini-Hinton-Arango IA: the high-level stuff. What is this, what does it do, what is going on in the user’s brain?

- "We look at the present through a rear view mirror. We march backwards into the future." - Marshall McLuhan

- "Do what you do best, and link to the rest." -Jeff Jarvis

- "Man is a being in search of meaning" -Plato

- "Language is infrastructure" - Andrew Hinton

- "Un esprit nouveau souffle aujourd'hui" (There is a new spirit today) -Le Corbusier

- "Cyberspace is not a place you go to but rather a layer tightly integrated into the world around us." - Andrea Resmini

- "IA is focused on the structural integrity of meaning" - Jorge Arango

- "Once there was a time and place for everything: today, things are increasingly smeared across multiple sites and moments in complex and often indeterminate ways." - W. J. Mitchell

- "Christina Wodtke makes me brave. She says 'Here, put this in your mouth.'" -Abby Covert

- "The difference between our websites and a ginormous fungus is the fungus has maintained its integrity." -Lisa Welchman

- "We live in Einsteinian physics, where things are both a particle and a wave." -Lisa Welchman

- "There are usually political barriers to solving information problems on sites. They’re not IA problems." - Lisa Welchman

- "I’m doing more IA and UX now as a product manager than I ever did when I was a UX designer" - Donna Lichaw

- "We may be confused, but we have a community of confusion" - Bryce Glass

- "Mathematicians have a saying: Problems worthy of attack prove their worth by fighting back." -Karl Fast

- "Discovery is not a phase. It is an ongoing activity." -Kerry-Anne Gilowey

- Define the boundaries of your domain

- Where objects touch existing models, use them instead of replicating them

- If canonical content pages already exist on your website for domain objects, link to them

- Don't have more than one page covering the same topic

- broken down into discrete concepts

- classified as real world things and relationships

- metadata: a structure readable by robots and people

- Never duplicate coverage within your own content (one page per thing)

- Do what you do best and link to the rest

- Create chunks based on the granularity of the model

- Be realistic about which parts of the model you can expose

- Structured content breaks subjects into things and relationships

- It begins by talking to experts and users to understand their world

- The mental model becomes a content model for your whole team

- Your content needs to be atomized to align to the model

- Supporting content may be hiding in your business silos

- "One page per thing" makes content easier to manage and link to

- Make the best content available for your subject

- Focus each page around a single topic

- Structure your content with metadata

- Link as much as you possibly can

- Old model was buy, install, reuse (software)

- New model is discover, use, forget (experience)

- Information technology

- Info organization and design

- Written / graphical language

- Spoken language

- Perception / cognition

- Ecological - how animals perceive/relate to environment

- Semantic - people communicating with people

- Digital - digital systems transmitting to and receiving from other digital systems

- we perceive elements in the environment as invariant (persistent) or variant (in flux)

- we perceive the environment in human-scale terms, not scientific abstractions

- we perceive the environment as “nested”, not in logical hierarchy

- Affordance: “the perceived functional properties of objects, places, and events in relation to an individual perceiver.”

- The built world

- Compounds

- Buildings

- Bounding forms

- Construction materials

- The noosphere

- Information ecosystem

- Product / system

- Node clusters

- Individual nodes

- a crossroads of intent and embodiment

- a foundation for cross channel place-making

- a tool for building immersive, purpose-driven organization systems based on embodied experience

- Complexity

- Multiplicity

- Postdigital

- Architecture

- NIEM - National Information Exchange Model

- HL7

- Good Relations

- Eyes not size - it’s about the amount of stuff that has passed by my eyes

- Emotional - Has psychic weight, psychological debt

- Complex problem

- Messy

- We look for answers before we understand the questions.

- We make decisions too early

- We ignore things we can’t make fit

- We keep going through the motions

- We end up solving the wrong problem

- Be honest

- Be confident (we need to solve the right problem)

- Communicate early and often

- Stay calm. It’s contagious

- Have a healthy fear of commitment

- Collaborate (with your client)

- Commit to the work, not the deliverables

- We reduce the chance of complaints

- We make social media a positive force

- Beyond the Brain, by Stanislav Grof

- Cognition in the Wild, by Edwin Hutchins

- The Ecological Approach to Visual Perception, by James J. Gibson

- The Ethnographic Interview, by James Spradley

- The Extended Mind, by Richard Menary

- Ghost in the Shell (movie)

- Performance Studies: An Introduction, by Richard Schechner

- Pervasive Information Architecture, by Andrea Resmini and Luca Rosati

- Strategy and Structure: Chapters in the History of the American Industrial Enterprise, by Alfred D. Chandler

- Supersizing the Mind, by Andy Clark

- System Esthetics, by Jack Burnham

- "The answer needs to be crafted to the person asking the question." - Mike Crandall

- "It depends; who are you creating value for? It depends on what YOU want to do with it." - Mario Sanchez

- "Information management is a bridge between users and technical implementers, between aspiration and reality, between what's unrealized and what's possible." -Unknown

- "We focus on the critical social, psychological, human side of organization systems." - Bob Mason

- "We're in the communications business, and our tools are processes as well as technology. We manage the ecology of information." - Bob Larsen

- "I achieve results efficiently." - Jason Robertson

Information Architecture in the Age of AI, Part 4: The IA-powered AI Future

or: I, For One, Welcome Our New Robot Overlords

This is the fourth in a four-part series of posts on IA and AI, mainly inspired by talks at IAC24. Read Part 1, Part 2, and Part 3.

“As artificial intelligence proliferates, users who intimately understand the nuances, limitations, and abilities of AI tools are uniquely positioned to unlock AI’s full innovative potential.” -Ethan Mollick, Co-Intelligence

As we’ve seen in the previous posts in this series, AI is seriously useful but potentially dangerous. As Kat King put it, AI is sometimes a butter knife and sometimes a bayonet.

It’s also inevitable. As Bob Kasenchak pointed out at IAC24, AI has become a magnet for venture capital and investment, and companies are experiencing major FOMO; nobody wants to be left behind as the GenAI train pulls out of the station.[1]

So, if that’s true, what do we do about it? Specifically as information architects: what does the IA practice have to say about an AI-spiced future? I think we need to do what we’ve always done: make the mess less messy and bring deep, systemic thinking to AI-ridden problems.

In short, we need to:

Define the damned (AI) thing

As I suggested in Part 1 of this series, AI needs to be better understood, and no one is better at revealing hidden structures and defining complex things than information architects.[2] Let’s work as a community to understand AI better so that we can have and encourage better conversations around it.

To do that, we need to define the types of AI, the types of benefits, the types of downsides, and the use cases for AI. In addition, catalogs of prompts and contexts, lists of AI personas, taxonomies for describing images, and so forth – all easily accessible – would help improve the ability of users to interact with LLM-based AI agents.

Here’s a starter list of things any of us could do right now:

Make AI less wrong

Y’all, this is our moment. As IAs, I mean: the world needs us now more than ever. LLMs are amazing language generators, but they have a real problem with veracity; they make up facts. For some use cases, this isn’t a big issue, but there are many other use cases where there’s real risk in having GenAI make up factually inaccurate text.

The way to improve the accuracy and trustworthiness of AI is to give it a solid foundation. Building a structure to support responsible and trustworthy AI requires tools that IAs have been building for years. Meaning things like:

As Jeffrey MacIntyre said at IAC24, “Structured data matters more than ever.” As IAs, our seat at the AI table is labeled “data quality”.

To get there, we need to define the value of data quality so that organizations understand why they should invest in it. At IAC24, Tatiana Cakici and Sara Mae O’Brien-Scott from Enterprise Knowledge gave us some clues to this when they identified the values of the semantic layer as enterprise standardization, interoperability, reusability, explainability, and scalability.

As a profession, we know this is true, but we’re not great at talking about these values in business terms. What’s the impact on the bottom line of interoperable or scalable data? Defining this will solidify our place as strategic operators in an AI-driven world. (For more on how to describe the value of IA for AI, pick up IAS18 keynoter Seth Earley’s book “The AI-Powered Enterprise,” and follow Nate Davis, who’s been thinking and writing about the strategic side of IA for years.)

Finally, as Rachel Price said at IAC24, IAs need to be the “adults in the room” ensuring responsible planning of AI projects. We’re the systems thinkers, the cooler heads with a long-term view. In revealing the hidden structures and complexities of projects, we can help our peers and leaders recognize opportunities to build responsible projects of all kinds.[4]

AI as a tool for thinking, not thought

In 1968, Robert S. Taylor wrote a paper titled “Question-Negotiation and Information Seeking in Libraries.” In it, he proposed a model for how information-seekers form a question (an information need) and how they express that to a reference librarian. Taylor identified the dialog between a user and a reference librarian (or a reference system) as a “compromise.” That is, the user with the information need has to figure out how to express their need in a way that the librarian (or system) can understand. This “compromised” expression may not perfectly represent the user’s interior understanding of that need. But through the process of refining that expression with the librarian (or the system), the need may become clarified.

This is a thinking process. The user and the librarian both benefit from the process of understanding the question, and knowledge is then created that both can use.

In his closing keynote at IAC24, Andy Fitzgerald warned us that “ChatGPT outputs things that LOOK like thinking.” An AI may create a domain model or a flow chart or a process diagram or some other map of concepts; but without the thinking process behind them, are they truly useful? People still have to understand the output, and understanding is a process.

As Andy pointed out, the value of these models we create is often the conversations and thinking that went into the model, not the model itself. The model becomes an external representation of a collective understanding; it’s a touchstone for our mental models. It isn’t something that can be fully understood without context. (It isn’t something an AI can understand in any sense.)

AI output doesn’t replace thinking. “The thinking is the work,” as Andy said. When you get past the hype and look at the things that Generative AI is actually good for – summarizing, synthesizing, proofreading, getting past the blank page – it’s clear that AI is a tool for humans to think better and faster. But it isn’t a thing that thinks for us.

As IAs, we need to understand this difference and figure out how to ensure that end users and people building AI systems understand it, too. We have an immensely powerful set of tools emerging into mainstream use. We need to figure out how to use that power appropriately.

I’ll repeat Ethan Mollick’s quote from the top of this post: “As artificial intelligence proliferates, users who intimately understand the nuances, limitations, and abilities of AI tools are uniquely positioned to unlock AI’s full innovative potential.”

If we understand AI deeply and well, we can limit its harm and unlock its potential. Information Architecture is the discipline that understands information behavior, information seeking, data structure, information representation, and many other things that are desperately needed in this moment. We can and should apply IA thinking to AI experiences.

Epilogue

I used ChatGPT to brainstorm the title of this series and, of course, to generate the images for each post. Other than that, I wrote all the text without using GenAI tools. Why? I’m sure my writing could have been improved, and I could have made these posts a lot shorter, if I had fed all this into ChatGPT. I know it still would have been good, even. But it wouldn’t have been my voice, and I don’t think I would have learned as much.

That’s not to say I have a moral stance or anything against using AI tools to produce content. It’s just a choice I made for this set of posts, and I’m not even sure that it was the right one. After all, I’m kind of arguing in this series that the responsible use of AI is what we should be striving for, not that using it is bad or not using it is good (or vice versa). But I guess, as I said above, echoing Andy Fitzgerald, I wanted to think through this myself, to process what I learned at IAC24. I didn’t want to just crank out some text.

I do believe that with the rise of AI-generated text and machine-generated experiences, there’s going to be an increasing demand for authentic human voices and perspectives. You can see, for example, how search engines are becoming increasingly useless these days as more AI-generated content floods the search indexes. Human-curated information sources may become more sought-after as a result.

Look, a lot of content doesn’t need to be creative or clever. I think an AI could write a pretty competent Terms of Service document at this point. No human ever needs to create another one of those from scratch. But no GenAI is ever going to invent something truly new, or have a new perspective, or develop a unique voice. And it is never going to think. Only humans can do that. That’s still something that’s valuable.

So, use GenAI. Use it a lot. Experiment with it and figure out what it’s really good at. I think that’s our responsibility with these tools: to understand them. But don’t forget to use your own voice, too. No AI is going to replace you, but it might just make you think faster and better. Understanding AI and using it to make a better you… that’s the best of all outcomes.

Information Architecture in the Age of AI, Part 3: The Problems With GenAI

(Image by DALL-E. Prompt: An impressionistic image representing ‘the problems of generative AI.’ The colors are dark and muted, creating a moody and foreboding atmosphere. The scene is a dark alley in a gritty, noir-style city. A shadowy figure with a hat and trench coat lurks as if waiting for his next victim.)

This is the third in a four-part series of posts on IA and AI, mainly inspired by talks at IAC24, the information architecture conference. Read Part 1 and Part 2.

In this post, I’m riffing on the work of several IAC24 speakers, predominantly presentations by Emily Bender and Andrea Resmini.

In Part 2 of this series, I looked at the benefits of Generative AI. The use cases at which GenAI excels are generally those in which the AI is a partner with humans, not a replacement for humans. In these cases, GenAI is part of a process that a human would do anyway and where the human is ultimately in control of the output.

But GenAI output can have numerous issues, from hallucinating (making up false information) to generating content with biases, stereotypes, and other subtle and not-so-subtle errors. We can be lulled into believing the AI output is true because it’s presented as human-like language, so it seems like a human-like process created it. But although GenAI can create convincing human-like output, at its core it’s just a machine. It’s important to know the difference between what we think AI is and what it actually is, and between what we think it’s capable of and what it’s actually capable of.

AI isn’t human

Here is basically what Generative AI does: it makes a mathematical representation of some large corpus of material, such as text or images. It then makes new versions of that material using math. For example, if you feed an LLM (large language model) a lot of examples of text and have it run a bunch of statistics on how different words relate to each other, you can then use those statistics to recombine words in novel ways that are eerily human-like. A process called fine-tuning will teach the LLM the boundaries of acceptable output, but fine-tuning is necessarily limited and can’t always keep GenAI output error- or bias-free. (And the process may introduce new biases.) LLMs produce statistically-generated, unmoderated output that sounds human-generated.
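To make “run a bunch of statistics on how different words relate to each other” a little more concrete, here is a deliberately tiny sketch in Python: a bigram model that records which word followed which in a toy corpus, then generates new sequences by sampling from those records. This is a loud simplification and an assumption of mine, not how production LLMs work (they use neural networks over tokens with much longer context), but the core move is the same: learn patterns from existing text and recombine them, with nothing in the code that knows what any word means.

```python
import random
from collections import defaultdict


def train_bigrams(text: str) -> dict[str, list[str]]:
    """Record, for each word, every word that followed it in the corpus."""
    words = text.lower().split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str, length: int, seed: int = 0) -> list[str]:
    """Walk the table, choosing each next word in proportion to how often it followed."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a successor in the corpus
            break
        output.append(rng.choice(options))  # duplicates in the list weight the choice
    return output


# A hypothetical toy corpus; real models ingest billions of words.
corpus = (
    "the librarian helps the user refine the question "
    "the user asks the system a question "
    "the system answers the user with confidence"
)
table = train_bigrams(corpus)
print(" ".join(generate(table, "the", 10)))
```

Every adjacent pair of words in the output appeared somewhere in the corpus, yet the sentence as a whole may never have been written, and may not be true. The model has no way to tell the difference.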

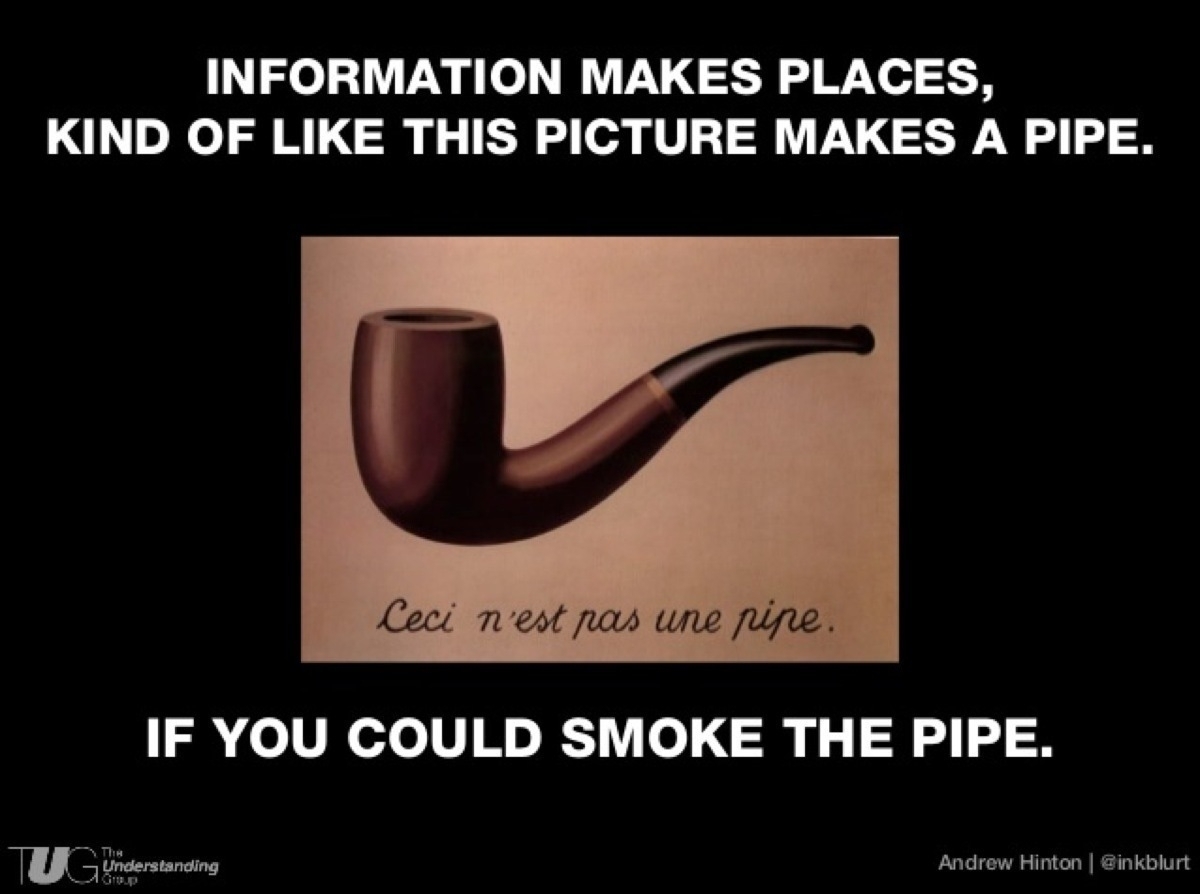

Therein lies the main problem: Generative AI produces human-like output while not being human, or self-aware, or anything like an actual thinking thing. As Andrea Resmini put it in his talk at IAC24, “AI is technology that appears to be intelligent.” Emphasis on “appears.” Since GenAI output seems human but isn’t, it tricks our brains into believing that it’s more reliable and trustworthy than it actually is.

Humans want to see human qualities in everything. We anthropomorphize; we invest objects in the world with human attributes. We see human faces in things where they don’t exist.[1] We get easily wrapped up in the experience of seeing human qualities in other things and forget that the thing itself is just a thing; we’ve projected our own experience onto it.

It’s not too dissimilar from experiencing art. We bring meaning — our filters, our subjective experience — to the experience of art. We invest art with meaning. There is no experience of art without our participation in it. Your experience of a piece of culture might be very different from mine because of our different life experiences and thinking patterns and the different ways we interact with the work.

In the same way, when we participate in AI experiences we invest those experiences with meaning that comes from our unique subjective being. GenAI isn’t capable of delivering meaning. We bring the meaning to the experience. And the meaning we’re bringing in the moment is based on how we’ve learned to interact with other humans.

In a recent online article, Navneet Alang wrote about his experience of asking ChatGPT to write a story. He sought an explanation for his sensation of how human-like the story felt:

Robin Zebrowski, professor and chair of cognitive science at Beloit College in Wisconsin, explains the humanity I sensed this way: “The only truly linguistic things we’ve ever encountered are things that have minds. And so when we encounter something that looks like it’s doing language the way we do language, all of our priors get pulled in, and we think, ‘Oh, this is clearly a minded thing.’”

AI simulates human thought without human awareness

Attributing human thought to computer software has been going on long enough to have a name: The ELIZA Effect. ELIZA was an early computer program that would ask simple questions in response to text input. The experience of chatting with ELIZA could feel human-like – almost like a therapy session – though the illusion would eventually be broken by the limited capacity of the software.
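For a sense of how little machinery the ELIZA effect needs, here is a minimal ELIZA-style responder in Python. The rules and wording are hypothetical, invented for illustration, and are not the original ELIZA script; the point is that a few pattern-and-template pairs can produce replies that feel attentive while the program understands nothing at all.

```python
import re

# Hypothetical rules in the spirit of ELIZA: a regex pattern and a reply template.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please, go on."

# Swap first- and second-person words so echoed fragments read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(text: str) -> str:
    """Return the first matching template, filled with the reflected fragment."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK  # no rule matched: stall politely, as a listener might


print(respond("I feel anxious about my work."))  # → Why do you feel anxious about your work?
print(respond("The weather is nice."))  # → Please, go on.
```

There is no model of the user, no memory, and no intent here, only string substitution. The sense that something is listening comes entirely from us.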

GenAI is a way better ELIZA, but it’s still the same effect. When we impute human qualities to software, we’re making a category error: we’re equating symbolic computations with the ability to think.

GenAI can simulate the act of communicating intent and make statements that appear authoritative. But, as Dr. Bender said in her IAC24 keynote, AI doesn’t have intent. It can’t know what it’s saying, and it’s very possible for it to say something very confidently that is very wrong and possibly very harmful.

GenAI engines lack (at least) these important human qualities:

Those last points are really important: Computers can’t think and they can’t feel. They can’t know what they’re doing. They are machines made of code that can consolidate patterns of content and do some fancy math to recombine those patterns in novel ways. No more. And it’s right to be skeptical and maybe even scared of things that can’t reason and have no empathy but that can imitate external human behavior really well. They are not human, just human-like.

And so here’s the problem: if we confuse the human-like output from a GenAI engine with actual human output, we will have imputed all sorts of human-like qualities to that output. We will expect it to have passed through human filters like judgment and humility (because that’s what a human would do, and what we’re interacting with appears human). We will open ourselves up to accepting bad information because it sounds like it might be good. Even if we also get good information, if we can’t reliably discern the bad information, we make ourselves vulnerable to all sorts of risks.

AI training data, however, is human… very, very human

So, human-like behavior without human thought behind it is a big problem with GenAI. Another major problem has to do with the data that’s been used to train the large language models GenAI engines are based on. And for the moment I’m setting aside the ethical questions around appropriating people’s work without notice or compensation. Let’s just focus for now on the issue of data quality.

GenAI scales the ability to create text and images based on what humans have already created. It does this by ingesting vast quantities of human-created content, making mathematical representations of probabilities inherent in that content, and then replaying those patterns in different combinations.

Through this process, GenAI reflects back to us a representation of ourselves. It’s like looking in a mirror in a well-lit bathroom, in a way, because it reveals not only our best qualities but also our flaws and biases. As Andrea Resmini pointed out, data is always dirty. It’s been created and edited by humans, and so it has human fallibility within it. Whatever reality was inherent in the training data will be reflected in the GenAI output. (Unless we take specific steps to hide or moderate those flaws, and those don’t always go well.)

If GenAI is sometimes a bathroom mirror, it’s also sometimes a funhouse mirror, distorting the interactions that it produces due to deficiencies in the training data (and because it is not self-aware and can’t correct itself). For any question that matches a sufficient amount of training data, the GenAI agent can sometimes give a reasonably accurate answer. Where there is little or no training data, a GenAI agent may make up an answer, and this answer will likely be wrong.

It’s garbage in, garbage out. Even if you get the occasional treasure, there’s still a lot of trash to deal with. How do we sort out which is which?

Context cluelessness and psychopathy

So, a GenAI engine might give you a response that’s 100% correct or 0% correct or anywhere in between. How do you know where on the spectrum any given response may lie? Without context clues, it’s impossible to be sure.

When we use search engines to find an answer to a question, we get some context from the sites we visit. We can see if it’s a source with a name we recognize, or if obvious care has been taken to craft a good online experience. And we can tell when a site has been built poorly, or is so cluttered with ads or riddled with spelling and grammar errors that we realize we should move on to the next source.

GenAI engines smooth all of their sources out like peanut butter on a slice of white bread, so that those context clues disappear into the single context of the AI agent.

And if the agent is responding as if it were human, it’s not giving you human clues to its veracity and trustworthiness. Most humans feel social pressure to be truthful and accurate in most situations, to the best of their abilities. A GenAI agent does not feel anything, much less social pressure. There is nothing in a GenAI engine that can be motivated to be cautious or circumspect or to say “I don’t know.” A GenAI agent is never going to come back to you the next day and say, “You know, I was thinking about my response to you and I think I might have gotten it wrong.”

Moreover, a human has tells. A human might hedge or hesitate, or look you in the eye or avoid your gaze. A GenAI agent won’t. Its tone will be basically the same regardless of the truth or accuracy of its response. This capacity to be completely wrong while appearing confident and authoritative is the most disturbing aspect of GenAI to me.

As GenAI gets more human-like in its behaviors it becomes a more engaging and convincing illusion, bypassing our BS detectors and skepticism receptors. As a result, the underlying flaws in GenAI become more dangerous and more insidious.

Are there even more problems with GenAI? Yeah, a few…

I’ve focused here on some high-level, systemic issues with GenAI, but there are many others. I would encourage you to read this Harvard Business Review article titled “AI’s Trust Problem” for an excellent breakdown of 12 specific AI risks, from ethical concerns to environmental impact. If AI’s lack of humanity alone isn’t enough to make you cautious about how you use it, perhaps the HBR article will do the trick.

All that said…

For all its faults, Generative AI is here to stay, like it or not. Businesses are gonna business, and we humans love a technology that makes our lives easier in some way and damn the consequences. And, as I pointed out in Part 2, GenAI can be truly useful, and we should use it for what it’s good at. My point in going through the pros and cons in such detail is to help set up some structure for how to use GenAI wisely.

So, if we accept that this type of AI is our new reality, what can we do to mitigate the risks and make it a better, more useful information tool? Is it possible information architects have a big role to play here? Stay tuned, dear reader… These questions and more will be answered in the final part of this series, coming soon to this very blog.

Information Architecture in the Age of AI, Part 2: The Benefits of Generative AI

(Image by DALL-E. Prompt: Create an image representing the concept “the benefits of generative AI.” The style should be semi-realistic. Colors should be bright. Include happy humans and robots working together, unicorns, rainbows, just an explosion of positive energy. The image should embrace positive aspects of AI. No text is necessary. The setting is a lush hillside.)

This is the second in a four-part series of posts on IA and AI, mainly inspired by talks at IAC24, the information architecture conference. Read Part 1 here.

Before I get into the problems with Generative AI (GenAI), I want to look at what it’s good for. As Jorge Arango has pointed out, there was a lot of hand-wringing and Chicken-Littleing about GenAI at IAC24 – and justifiably so – yet not a lot of acknowledgement of the benefits. So let’s look at the useful things that GenAI can do.

In her IAC24 talk “Structured Content in the Age of AI: The Ticket to the Promised Land?,” Carrie Hane offered the following list of things that Generative AI could do well:

This seems like an excellent starting point (I might only add image generation to the above). We might want to caveat this list a bit, though. For one thing, we should remain skeptical about the use of GenAI in specific professional applications such as medical research and coding, at least until we’ve had time to see if we can poke holes in the extraordinary claims coming from AI researchers. Despite the legitimate promise of these tools, we’re still learning what AI is actually capable of and how we need to use it. There may well be good uses for these tools in industry verticals, but we’re almost certainly seeing overblown claims at this point.

For another thing, as Carrie would go on to point out, in many cases getting useful, trustworthy output from GenAI requires some additional technological support, such as integrating knowledge graphs and RAG (retrieval-augmented generation). These technologies are not yet well understood or thoroughly integrated into AI models.

In a recent online course, Kirby Ferguson listed some additional uses for GenAI:

These use cases fall into one of two patterns:

In other words, we’re not handing control of anything to the AI engine. We’re using it as a tool to scale up our ability to do work we could have done without the AI. GenAI is a partner in our work, not a replacement.

I don’t think you can overstate the real benefits of these use cases. For rote, repetitive work, or for things like research or analysis that’s not novel and is relatively straightforward but time-consuming, GenAI is a real boon. As long as there’s a human taking responsibility for the end product, there are a lot of use cases where GenAI makes sense and can lead to significant productivity and quality-of-life gains.

And a lot of the use cases listed above are like that: time-consuming, boring, energy-draining tasks. Anything that relieves some of that mental drudgery is welcome. This is why GenAI has gained so much traction over the past year or so: because it’s actually quite useful.

I want to be clear: there’s a lot of hype around AI, and a lot of its benefits have been overstated or haven’t been studied enough to understand them thoroughly. There are a lot of claims around AI’s utility in everything from drug manufacturing, to medical diagnosis, to stopping climate change, to replacing search engines, to replacing whole categories of workers. Many – maybe most – of these claims will turn out to be overblown, but there will still be significant benefits to be had from the pursuit of AI applications.

A frequent comparison to the hype around Generative AI is the hype a decade ago about autonomous vehicles. Full self-driving turns out to be really, really hard to accomplish outside of certain very narrow use cases. Autonomous vehicles that can go safely anywhere in any conditions may be impossible to create without another generational shift in technology. But the improvements to the driving experience that we’ve gained in pursuit of fully autonomous driving are real and have made operating a car easier and safer.

I think it’s likely that AI – Generative AI, in particular – will turn out to be more like that: a useful tool even if it falls far short of today’s breathless predictions.

So, GenAI excels when it’s used as a thinking tool, a partner working alongside humans to scale content generation. And like any tool that humans have ever used, we have to use GenAI responsibly in order to realize the full benefits. That means we have to understand and account for its real, serious weaknesses and dangers. In Kat King’s words, we have to understand when AI is a butter knife and when it’s a rusty bayonet. In Part 3 of this series, we’ll contemplate the pointy end of the bayonet.

Information Architecture in the Age of AI, Part 1: The Many Faces of AI

(Image by DALL-E)

I’m not sure where I am in the race to be the last person to write about IAC24, but I have to be pretty close to leading the pack of procrastinators. The annual information architecture conference was held in my home city of Seattle this year, and despite not having to travel for it, it’s taken me a while to consolidate my thoughts.

No matter. It’s not about being first or last, just about crossing the finish line. So here I am, like a pokey yet determined walker in a marathon, presenting the first of a four-part series of posts on IA and AI, mainly inspired by presentations and conversations at IAC24 and things I’ve noticed since then as a result of better understanding the field.

In this first part, like a good information school graduate, I argue for better definitions of AI to support better discussions of AI issues. The second part covers what Generative AI seems to be useful for, and the third part is about the dangers and downsides of Generative AI. Finally, I’ll look at the opportunities for the information architecture practice in this new AI-dominated world, and I’ll suggest the proper role for AI in general (spoiler: the AI serves us, not the other way around).

Note: I used ChatGPT to brainstorm the title of the series and to create a cover photo for each post. Other than that, I wrote and edited all the content. I leave it as an exercise to the reader to decide whether an AI editor would have made any of this better or not.

The many faces of AI

Let’s start with some definitions.

AI is not a single thing. Artificial Intelligence is a catchall term that describes a range of technologies, sub-disciplines, and applications. We tend to use the term AI to apply to cutting-edge or future technologies, making the definition something of a moving target. In fact, the “AI effect” is a phenomenon in which products that were once considered AI are redefined as something else, and the term AI is then applied to “whatever hasn’t been done yet”[1].

So, let’s talk a bit about what we mean when we say “AI”. As I learned from Austin Govella and Michelle Caldwell’s workshop “Information Architecture for Enterprise AI,” there are at least three different types of AI:

Most of us are probably barely aware of the other two types of AI tools, but we know all about the first one. In fact, when most of us say “AI” these days, we’re really thinking about Generative AI, and probably ChatGPT specifically. The paradigm of a chat interface to large language models (LLMs) has taken up all the oxygen in AI discussion spaces, and therefore chat-based LLMs have become synonymous with AI. But it’s important to remember that’s just one version of machine-enhanced knowledge representation systems.

There are other ways to slice AI. AI can be narrow (focused on a specific task or range of tasks), general (capable of applying knowledge like humans do), or super (better than humans).[2] It can include machine learning, deep learning, natural language processing, robotics, and expert systems. There are subcategories of each, and more specific types and applications than I could list here.

The different types of AI can also take different forms. Like the ever-expanding list of pumpkin-spiced foods in the fall, AI is included in more and more of the digital products we use, from photo editors to writing tools to note-taking apps to social media… you name it. In some cases, AI is a mild flavoring that gently seasons the UI or works in the background to enhance the software. In other cases, it’s an overwhelming, in-your-face, dominant flavor of an app or service.

As IAs, we need to do a better job helping to define the discrete versions of AI so that we can have better discussions about what it is, what it can do, and how we can live with it. Because, as Kat King pointed out in her talk “Probabilities, Possibilities, and Purpose,” AI can be used for good or ill. Borrowing a metaphor from Luciano Floridi, Kat argued that AI is like a knife. It can be like a butter knife – something that is used as a tool – or like a rusty bayonet – something used as a weapon.

This inherent duality makes AI like any other technology, any other tool that humans have used to augment our abilities. It’s up to us to determine how to use AI tools, and to use them responsibly. To do this, we need to stop using the term “AI” indiscriminately and start naming the many faces of AI so that we can deal with each individually.

There are some attempts at this here, here, and here, and the EU has a great white paper on AI in the EU ecosystem. But we need better, more accessible taxonomies of AI and associated technologies. And, as IAs, we need to set an example by using specific language to describe what we mean.

So, in that spirit, I’m going to focus the next two parts of this series specifically on Generative AI, the kind of AI that includes ChatGPT and similar LLM-powered interfaces. In Part 2, I’ll talk about the benefits of GenAI, and in Part 3, I’ll look at GenAI’s dark side.

What is Information Architecture?

Looking for a comprehensive overview of information architecture? Look no further. This curated list of articles and resources from the fine folks at Optimal Workshop is a brilliant reference.

Dewey's biased decimal system

Check out this week’s Every Little Thing podcast. It’s nominally about the Dewey Decimal System, but it’s really about the political nature of organizing things and how the organizing systems we create reflect our biases. (Starts 9 minutes in.)

YADIA: “IA defines spatial relationships and organizational systems, and seeks to establish hierarchies, taxonomies, vocabularies, and schema—resulting in documentation like sitemaps, wireframes, content types, and user flows, and allowing us to design things like navigation and search systems.”

-from Content Everywhere, by Sara Wachter-Boettcher

Glushko's definition of IA

Yet Another Definition of IA (YADIA):

“The activity of Information Architecture [is] designing an abstract and effective organization of information and then exposing that organization to facilitate navigation and information use.”

-from The Discipline of Organizing, by Robert Glushko

Partly to test this blog’s linkage with micro.blog, I thought I’d mention that I’ve been digging back into Designing the Search Experience for inspiration lately. So much good stuff in there about how to turn information behavior studies into practical design solutions. Two words: search modes!

Information Everywhere, Architects Everywhere

The following is adapted from my opening remarks at World IA Day Seattle 2016, which took place on Saturday, February 20. The theme for World IA Day this year was: Information Everywhere, Architects Everywhere.

If you've been around the information management world for any length of time, you've probably heard the joke about the old fish and the young fish. The old fish says, "Water's fine today." And the young fish says, "What's water?"

I didn't say it was a good joke.

But it is useful as a shorthand for explaining something about what information is. We're like the fish, obviously, and information is all around us. We're swimming in it, but we don't even notice it until we learn to see it.

How much information did you encounter last week? This morning? Since you started reading this? I'll bet you couldn't quantify the amount of information around you on any time scale. The room you're in is information, the street outside, the words you're reading, the clothes we're wearing... every sight, smell, sound, and surface carries information, and we process it all in an instant and without even noticing that we're doing it.

We live in a universe of information. And most of the time we can, like the young fish, just swim in it and go about the business of being. But sometimes, we want to shape and form information into something intentional and meaningful, into a web site, an intranet, an app, a monument, or some other information experience. At those moments, when information is both the medium and the message, we must notice the information all around us and attempt to make it meaningful to ourselves and others. We must apply design. We must practice information architecture.

Now, I imagine a variation of the joke about the fish where in this version the old fish says to the young fish: "I'm a fish." And the young fish says, "What's a fish?"

It's still not a good joke.

But I think we encounter something like this when we try to explain to our friends, family, colleagues, and bosses that we're information architects. When I tell someone I'm an information architect, I get something of a blank stare. For the longest time I tried to figure out how to break through that and come up with a cool way of explaining what I do ("I'm like a ninja, but with information."), but I'm starting to lose hope that I'll come up with the right words.

After all, everyone's something of an information architect. Everyone organizes something: closets, movie collections, garages, files on the computer, kitchens, bookshelves... you name it. We all try to impose some sort of order on the world, to create systems that make sense and keep on making sense, and impart some sort of meaning to others. We're all fish. I mean, we're all architects.

It's just that, for those of us who are crazy enough to voluntarily identify ourselves as "information architects", we're doing more than organizing our spice racks or shoe closets. We are doing the same thing, essentially, except we're attempting to do it at scale. We're trying to impose order on thousands and millions of items of information at a time, for users who may number in millions or billions. And these days we're usually trying to do it within a window the size of an index card.

And there's something so interesting about that to me. It seems like a fraught enterprise: doomed yet noble, and occasionally elegant and beautiful. There is information everywhere. And there are architects everywhere. But the rare breed who call themselves information architects are lucky enough to recognize these things; to understand that this is water, and we are fish.

And to be able to know that is pretty damned cool.

The Unexpected Virtue of Metadata

It's really hard to get people to understand why it's worth investing in metadata and taxonomy projects. The benefits aren't immediate and the reasons can seem esoteric. It's only after the work is done that the usefulness of metadata starts to become clear.

Proof of this comes in this interview with a colleague of mine at REI. This is a quote I’m going to pull out at every metadata and taxonomy meeting from now on:

“[Collecting metadata] turned out to be really smart. We didn't realize the repercussions of it when we did it. But the structured way we captured the meta-data and user-generated content (UGC) laid the groundwork for how we use that content." (My emphasis.)

I had nothing to do with the decision to collect metadata in this instance, but I've seen firsthand the powerful unintended benefits of having robust structured content. Perhaps one way to convince others ahead of time that they should invest in proper content markup is to collect more testimonials and stories like these. If you know of any others, let me know in the comments.

Selling Information Architecture

In my opinion, IA is not something that needs to be sold. IA is already inherent to whatever someone is working on or has in place. If you are making something, you will be tackling the IA within and around it. With or without me you will “do IA.”

I guess in sales speak we could say “IA is included for free in all projects” — because a system without an information architecture does not exist. Rather than selling information architecture, I find that I do have to “explain” what it is and why it matters so that it can be worked on and improved upon (not ignored or inherited, which is all too often the case).

Whether you're interested in "selling" IA or not, the fact is you'll probably have to explain what you do to others. Probably multiple times. Per day. You could do worse than have a few of Abby's scripts memorized.

Notes from the 2013 IA Summit, Baltimore, MD

Last week I attended the IA Summit in Baltimore. It was my first IA Summit and I'm very glad I decided to go. I met so many intelligent, thoughtful, and passionate practitioners (and academics) of information architecture, and I found myself inspired and challenged to raise my game and do better work.

The following are some of my rough notes from the five days of the conference. While this is short on narrative, I hope there are enough nuggets of wisdom and links to explore that you'll find something new to think about.

Themes

Some of the major concepts that kept cropping up for me:

Quotes

Wednesday, April 3, 2013

Academics and Practitioners Round Table: Reframing Information Architecture

"Un esprit nouveau souffle aujourd'hui" (A new spirit breathes [lives] today)

Andrea Resmini introduced the day by putting the current information ecology in perspective for us. We have moved from a world where computing was done in a specific time and place to a world where computing is ever-present. "Cyberspace is not a place you go to but rather a layer tightly integrated into the world around us."

For example, the average citizen in the EU spends 29.5 hours computing each week. The average citizen in the UK spends 39 hours computing each week.

The day was structured around groups of Ignite-style talks, with discussion and an exercise after each.

Talks for Part 1

David Fiorito: The Cultural Dimensions Of Information Architecture.

Culture is a learned and shared way of living; we are creating culture through IA

David Peter Simon: Complexity Mapping

We need to develop device-agnostic content.

We think a lot about how content will be presented (on different form factors like tablets, watches, Google Glass), but we don't think so much about how information will be presented on those new forms.

Simon Norris

"Man is a being in search of meaning" -Plato

Take a meaning-first approach to IA. Start with meaning

Matt Nish-Lapidus: Design For The Network: The Practice Of 21st Century Design And IA

The Romans built road networks to spread their cultural software. We build networks to spread a different type of cultural software.

Lev Manovich talks about the Cultural Interface: "…we are no longer interfacing to a computer but to culture encoded in digital form …" (Lev Manovich, The Language of New Media (2001))

We need a new design practice for the networked world, one that embraces humans, technology, craft, and interface.

Culture is moving faster and faster, moving up in the pace layer diagram

Recap and discussion from Part 1

Was Le Corbusier an IA? He was part of our history, one of the people whose shoulders we stand on, but his work must be understood in the context of his time. Calling him an IA wouldn't be fair.

Does the IA create meaning by designing which resource is linked, or is there no way to create meaning because there are so many hyperlinks that users create their own meaning?

Christina Wodtke suggested developing a "Poetics of information architecture"

Talks for Part 2

(Note: I missed a speaker here, but I think it was Duane Degler)

Sally Burford: The Practitioners of Web IA

Reviewed some research into the practice of IA at small and large organizations. IA needs to develop an identity, to take a strong stance.

Jason Hobbs and Terence Fenn: The IA of Meaning-Making

Defining the problem defines the solution; this is the problem/solution ecology

The IA design deliverable is resolving the problem/solution ecology

Recap and discussion from Part 2

What's missing around IA in the academic community?

There is no written history of IA. (Some suggested a book by Earl Morrogh, but others thought even that book was out of date.)

It's hard to have a career in academia when the term IA is not understood.

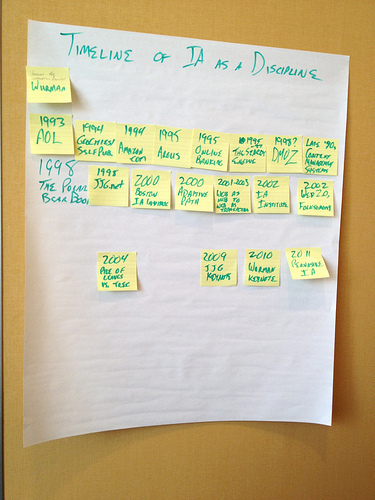

Activity: create a timeline of IA

Talks for Part 3

Dan Klyn: Dutch Uncles, Ducks, And Decorated Sheds

Meaning should be the center of our reframe of IA

Don't bake meaning into structure; keep them separate

Jorge Arango: "Good Fit" In The Design Of Information Spaces

Jorge developed themes from Christopher Alexander's Notes on the Synthesis of Form.

The designer has to create a mental picture of the actual world. In really complex problems, formal representations of the mental model need to be created.

Andrew Hinton: A Model for Information Environments

IA has always been architecture of a dimension of a shared reality.

We should continue working on reframing IA by thinking of it as a design problem.

Recap and discussion from Part 3

IA is about solving a problem.

Activity - List The Schools Of IA Thought

Thursday, April 4, 2013

Workshop - Modeling Structured Content

From background presentation for the workshop:

Don't reinvent, link!

Shared model + shared language + shared understanding = consistent user experience

From the workshop:

Structured content refers to information or content that has been broken down and classified using metadata.

Knowledge rejects rigid structure.

When we use the same language to describe the same things, we can build a web of knowledge across various services.

Structured content breaks down information into things and the relationships between them. A content model maps our subject domain, not our website structure or content inventory. Assertions distinguish between real-world things and the documents that refer to them.
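To make the "things, relationships, and assertions" idea a little more concrete, here is a minimal sketch in Python. It is not the workshop's model or any BBC tooling; the class names, the `relate`/`assert_about` methods, and the orca/salmon example are all hypothetical. It only shows the distinction the notes draw: relationships connect real-world things to each other, while separate assertions connect documents to the things they are about.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Thing:
    """A real-world entity in the subject domain (not a web page)."""
    id: str
    label: str


@dataclass(frozen=True)
class Document:
    """A piece of content that is *about* one or more things."""
    id: str
    title: str


@dataclass
class ContentModel:
    # Relationships between things, stored as (subject, predicate, object) triples.
    relations: set = field(default_factory=set)
    # Assertions linking documents to the real-world things they refer to.
    about: set = field(default_factory=set)

    def relate(self, subject: Thing, predicate: str, obj: Thing) -> None:
        self.relations.add((subject.id, predicate, obj.id))

    def assert_about(self, doc: Document, thing: Thing) -> None:
        self.about.add((doc.id, thing.id))

    def related(self, thing: Thing, predicate: str) -> list[str]:
        # Follow one kind of relationship outward from a thing.
        return sorted(o for s, p, o in self.relations if s == thing.id and p == predicate)

    def documents_about(self, thing: Thing) -> list[str]:
        # Find every document that has been asserted to be about this thing.
        return sorted(d for d, t in self.about if t == thing.id)


# Hypothetical example domain.
orca = Thing("orca", "Orca")
salmon = Thing("chinook", "Chinook salmon")

model = ContentModel()
model.relate(orca, "eats", salmon)

article = Document("doc-1", "Why orcas follow the salmon run")
model.assert_about(article, orca)
model.assert_about(article, salmon)

print(model.related(orca, "eats"))    # ['chinook']
print(model.documents_about(salmon))  # ['doc-1']
```

Note that the model describes the subject domain, not a site map: the article is findable from the salmon because of an assertion about the salmon, not because of where the article sits in a navigation hierarchy.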

Experts map the world, users mark points of interest.

Modeling begins with research, talking to experts and users to understand and articulate the subject. Ubiquitous language describes the granular terms that will be used by everyone on the project. Boundary objects show where your subject model can connect to neighboring models.

Content is hard.

Everything starts with good content. Content is not the stuff we pour into nicely designed containers. It's not the stuff we chase up from the client at the last moment. It is not a placeholder. Content is the whole damn point.

"Do what you do best, and link to the rest." -Jeff Jarvis

The link forces specialization and specialization demands quality

Other parts of your organization may be sitting on a goldmine of content or business data. Find it and exploit it.

The BBC's principles:

Wrap Up

Friday, April 5, 2013

Keynote: Beyond Mobile, Beyond Web: The Internet of Things Needs Our Help

We often walk right past the next big thing.

Blog post: Bears, Bats, and Bees

We are in a "model crisis"

We need a Google for your room. A personal search service for the things that are important to you.

The World is the Screen: Elements of Information Environments (slides)

We live in both the digital and physical environments simultaneously.

We need to look at how we comprehend environments generally in order to understand how we understand digital environments.

Pace layers of information environments:

Information in three modes

Traditional cognitive theory assumes the brain is like a computer

Embodied cognition says that the brain uses the body and the environment to understand

Some key ideas from James J. Gibson’s theories

Semantic information changes how we experience environment

"Language is a form of cognitive scaffolding." - Andy Clark, Supersizing the Mind

The body and the ecological information can override the semantic information, as in a door that looks like a push door but has a sign that says pull.

Digital information enables pervasive semantic place-making

The 7th Occasional IA Slam

A design presentation for the American Society for Immortalization Science and Technology

Poster Sessions & Reception

Saturday, April 6, 2013

Links, Nodes, and Order: a unified theory of information architecture (slides)

We need a better way to define what good IA is. And we need better ways to communicate about IA.

In architecture, simple forms can be recombined in different ways to create new forms.

Forms are made up of and can contain other forms

Nodes and links are our form and space

Nodes are our basic building blocks, and also the walls and buildings themselves

Nodes can contain and are made up of other nodes

Link: the relationship between nodes

There is a Form/Space Hierarchy (most complex at the top):

Similarly, there is a Node/Link Hierarchy (most complex at the top):

We need to apply different approaches to different levels of the node/link hierarchy

IA is focused on the structural integrity of meaning.

Taxonomy for App Makers (slides)

Taxonomy is rhetorical, place-making, and tied to embodiment

Mobile taxonomy is device independent

Mobile taxonomy is articulated

Mobile taxonomy is flexible

Taxonomies are goal-driven. They are rhetorical

Rhetoric - the means by which we inform, persuade, or motivate particular audiences in specific situations

Architecture is rhetoric for spaces

Taxonomy - a method of arrangement conceived to create a particular kind of understanding

Taxonomy is

Ghost in the Shell: Information Architecture in the Age of Post Digital (slides (from a previous version of this talk))

This was a rich and thought-provoking talk, but difficult to summarize. The major themes were:

There is a new spirit. It’s not towards order but towards disorder and multiplicity

A spirit of context, place and meaning

A spirit of sense-making, … and creating new places for humans to work and play

Web Governance: Where Strategy Meets Structure (slides)

Peter Morville and Lisa Welchman

Big governance vs. local governance

There’s a lot of stuff happening at the local [organizational] level, but few people, if anyone, are looking at the overall picture.

There are usually political barriers to solving information problems on sites. They’re not IA problems.

The governance of your web:

Your “stable environment” equals clear sponsorship, goals, and accountability.

Your “good genes” are a policy-driven, standards-based framework.

These things will allow your web to grow and still be recognizable as your web. That’s all it is.

Enterprise web governance ensures the proper stewardship of an organizational web presence.

Stewardship, not ownership.

Using Abstraction to Increase Clarity (slides)

Abstraction - the intentional filtering of information to focus on core goals

We must carefully choose the model through which we hold conversations. Abstractions help stakeholders discover.

Metadata in the cross-channel ecosystem: consistency, context, interoperability

Metadata enables consistency, context, and interoperability.

A cross channel experience requires sending information across channels.

“Messages have no meaning” - Andrea Resmini

… But every message has a structure

Standards:

Ontology is things and their relationships. When you have a concept of the space and you express it in a way that can be interchanged, you have an ontology.

Building the World’s Visual Language

Scott leads The Noun Project

Types of written languages:

Pictographic. Ideographic. Syllabic. Phonetic.

Sunday, April 7, 2013

Product Is the New Big IA

Christian Crumlish, Bryce Glass, Donna Lichaw

The panelists discussed moving out of IA and UX and into product roles.

Donna - "I’m doing more IA and UX now as a product manager than I ever did when I was a UX designer"

Christian - my titles have changed, but I’ve been basically doing the same thing: defining the damn thing of the product, connecting people and ideas.

Donna - A product manager is like a producer: they conceive the vision and get people moving in the same direction

Donna - In New York, no one has any idea what a product manager is

Bryce - "We may be confused, but we have a community of confusion"

Christian - Third-wave IA - or Resmini-Hinton-Arango IA - the high-level stuff; what is this, what does it do, what is going on in the user’s brain

Christian - There’s a clearer career path for the people who are in charge of product. There’s more headroom.

The Big Challenges of Small Data

Xerox PARC identified a disease: biggerism

Solving the big problem seems more interesting, more exciting, more challenging

Small data problems: a large personal library, a desktop full of icons, a gmail inbox

Big data is about stuff that’s too big for anyone to understand

Small data is about:

… And yet we focus on the app, not the coordination of the data

Organizations have big data problems

People have small data problems

Mathematicians have a saying: Problems worthy of attack prove their worth by fighting back.

We have small data problems worth working on. We are hitting the edges of search. Where’s the difference between searching and filtering? We have faceted search and faceted navigation. Which is it?

Problems are framed by how we think about the work we do. [I would say they’re also framed by the tools we have. -sm]

We need to make significant positive progress on a hard problem. We need to not be distracted by biggerism.

Schrödinger’s IA: Learning To Love Ambiguity

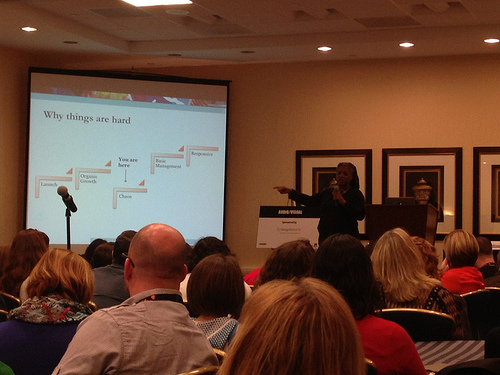

When we go into a project with a personal methodology…

We need ambiguity tolerance

Discovery is not a phase. It is an ongoing activity

Problem solving is squiggly

Designing For Failure: How Negative Personas And Failed User Journeys Make A Better Website (slides)

Classic personas are the users we want to come to the site. We give them happy paths.

At the other end of the scale are anti-personas, people we want to discourage. (People who intend harm, people who are doing things with the service we don’t want, people who are too young)

Negative personas are not the primary audience, but may still come and be disappointed. Are we giving them what they need?

We’re not designing for everyone, but we need to acknowledge and handle pain points in order to increase the number of satisfied customers.

Does it matter? Yes

You can’t control who comes to the site, so you have to try just not to annoy them.

Design for success or failure.

Appendix

Books, Articles, and Movies

Blog Posts and Artifacts

VRM and Information Management

So I'm a wee bit late posting this, but I was working on a poster presentation for this year's IA Summit when I came across the notes I took for my presentation at InfoCamp Seattle 2012. It's now posted here for internet posterity.

InfoCamp intro to VRM (PDF)

I thought this might be interesting for two reasons. First, I think the central message of the presentation is still relevant: information management professionals should be at the forefront of thinking about how people identify, store, and manage their own data in a VRM-enabled world. The PDF roughs out the main ideas of VRM and suggests how information management professionals might contribute to this emerging movement.

(Update 3-22-13: Holy smokes! I didn't realize that Tracy Wolfe, the Mod Librarian, posted a nice writeup of this presentation back in October. I really need to start paying attention to this whole internet thing. I hear it's really taking off.)

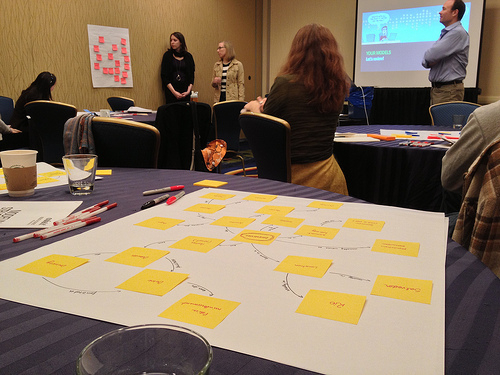

Secondly, (and a bit more prosaically) I drafted these notes in Scapple, an awfully interesting brainstorming program under development at Literature and Latte (the fine folks who make Scrivener). Scapple basically lets you enter free-form bits of text on a canvas of whatever size you choose. You can move the bits around to regroup them, and you can drag one text bit over another to relate the two with a dotted line. It was truly one of the best brainstorming experiences I've had on a computer. I ended up using the Scapple document as both my presenter notes and my presentation slide.

If you're like me and you're really not into the linear process of outlining, but you still want to do something like outlining on a computer, I encourage you to give Scapple a try. It's free for a limited time while in Beta. But watch out: you might get hooked.

A “clickable” world

Drew Olanoff suggests that Twitter could extend the hashtag idea to make "bits and pieces of data clickable." I'm not a fan of Twitter these days, but I love the idea of incorporating data structure into online content through simple affordances. From “Ah, so that’s Twitter’s strategy: A ‘clickable’ world” at The Next Web:

"By structuring data, Twitter could make its network of information easier to navigate and discover upon. It would also help the company structure its API so that third-party developers wouldn’t have to dig through every single tweet for particular information. I can click or tap around Wikipedia for hours, since everything is linked by its editors. Twitter could engage users in the same way."

A Smart Bike

This is why the future is in metadata.

Phil Windley gets us thinking about what a "smart bike" might do:

Imagine the bike being connected to its manufacturer, the bike store that sold it, and its owner. From its earliest point in being, the bike would be able to keep track of data about itself, things like its specifications, when it was made, and even the provenance of the materials used in its manufacture. The bike would keep track of inventory data like when it was delivered to the bike shop, who assembled it, its price, and when it was bought and by who. And all this would be possible with a personal cloud for a bike.

While Phil's vision could be accomplished without an on-board bike computer, it's hard to imagine a truly useful fire-and-forget system without one, or without a connected infrastructure at the important points of presence: the manufacturer, the store, the bike shop, etc. But this sort of automated administration would be useful for all sorts of things, and I don't see any technical hurdles standing in the way. It's just a matter of building the supporting schemas and software.
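To make "building the supporting schemas" a little more concrete, here is a minimal sketch of what a bike's personal-cloud record might hold: specifications, material provenance, and a dated log of inventory and ownership events. Everything here is hypothetical. Phil's post doesn't define a schema, and the class names, field names, event kinds, and sample data are illustrative assumptions only.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Event:
    """One dated thing that happened to the bike (hypothetical schema)."""
    when: date
    kind: str    # assumed kinds: "manufactured", "delivered", "assembled", "sold"
    party: str   # who was involved: manufacturer, shop, buyer, etc.
    details: dict = field(default_factory=dict)


@dataclass
class BikeRecord:
    """A hypothetical personal-cloud record for a single bike."""
    serial: str
    specs: dict
    material_provenance: dict
    events: list = field(default_factory=list)

    def log(self, when, kind, party, **details):
        # Append an event; extra keyword arguments become free-form details.
        self.events.append(Event(when, kind, party, details))

    def history(self, kind=None):
        # Events in date order, optionally filtered to one kind.
        ordered = sorted(self.events, key=lambda e: e.when)
        return [e for e in ordered if kind is None or e.kind == kind]

    def owner(self):
        # The most recent buyer, or None if the bike hasn't been sold yet.
        sales = self.history("sold")
        return sales[-1].party if sales else None


# Usage with made-up sample data.
bike = BikeRecord(
    serial="HYPO-0001",
    specs={"frame": "steel", "size_cm": 56},
    material_provenance={"frame_tubing": "hypothetical mill"},
)
bike.log(date(2012, 3, 1), "manufactured", "Example Bikes Co.")
bike.log(date(2012, 4, 2), "delivered", "Corner Bike Shop")
bike.log(date(2012, 4, 3), "assembled", "Corner Bike Shop", mechanic="J. Doe")
bike.log(date(2012, 5, 1), "sold", "A. Rider", price=850)

print(bike.owner())
```

Keeping the history as an append-only event log, rather than overwriting fields like "current owner," is one way to preserve the full provenance trail Phil describes; the current state can always be derived from the events.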

(This post was originally published on The Machine That Goes Ping on 5/26/12)

Information Architecture Defined

I ran across this definition of information architecture at IBM's developerWorks site and thought I'd share it here:

Design patterns for information architecture with DITA map domains

Information architecture can be summarized as the design discipline that organizes information and its navigation so an audience can acquire knowledge easily and efficiently. For instance, the information architecture of a Web site often provides a hierarchy of Web pages for drilling down from general to detailed information, different types of Web pages for different purposes such as news and documentation, and so on.

An information architecture is subliminal when it works well. The lack of information architecture is glaring when it works poorly. The user cannot find information or, even worse, cannot recognize or assimilate information when by chance it is encountered. You probably have experience with Web sites that are poorly organized or uneven in their approach, so that conventions learned in one part of the Web site have no application elsewhere. Extracting knowledge from such information resources is exhausting, and users quickly abandon the effort and seek the information elsewhere.

The same issues apply with equal force to other online information systems, such as help systems. The organization and navigation of the information has a dramatic impact on the user’s ability to acquire knowledge.
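The excerpt's "hierarchy of Web pages for drilling down from general to detailed information" can be pictured as a tree, where the path from the root to any page is the general-to-detailed route a visitor takes (and the breadcrumb a site might show). Here's a minimal sketch, assuming a hypothetical `Page` type and made-up page titles; it is not DITA map markup and isn't taken from the IBM article.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    """A node in a hypothetical site hierarchy."""
    title: str
    kind: str  # page type, e.g. "hub", "news", "documentation"
    children: list = field(default_factory=list)

    def add(self, child):
        # Attach a more detailed page beneath this one and return it.
        self.children.append(child)
        return child


def find_path(node, title, trail=()):
    """Depth-first search returning the general-to-detailed path to a page, or None."""
    trail = trail + (node.title,)
    if node.title == title:
        return list(trail)
    for child in node.children:
        path = find_path(child, title, trail)
        if path:
            return path
    return None


# A made-up site: general hubs at the top, detailed pages underneath.
site = Page("Home", "hub")
products = site.add(Page("Products", "hub"))
widget = products.add(Page("Widget", "documentation"))
widget.add(Page("Widget installation guide", "documentation"))
site.add(Page("News", "hub")).add(Page("Widget 2.0 released", "news"))

print(" > ".join(find_path(site, "Widget installation guide")))
# Home > Products > Widget > Widget installation guide
```

The `kind` field stands in for the excerpt's "different types of Web pages for different purposes"; a real site would likely attach templates or navigation conventions to each type.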

The Information Management Elevator Pitch Redux

I just came across some notes I took at this year's orientation for Mid-Career MSIMers. "The Question" came up, as it inevitably does in these kinds of gatherings: What is information management? Here are a few snippets I pulled from the answers back in September.


Originally posted at MSIM 2011